Sticky, a company with software that uses webcams to track users' eye movements to verify whether or not online ads are actually seen, on Thursday released data which supports the argument that viewability does not equal being seen.

"Viewability" describes whether or not an ad could be seen on a page without needing to scroll down, "X" out of something, change tabs, etc. While viewability metrics would suggest that close to 50% of ads are seen, Sticky's data says it's actually just 14%.

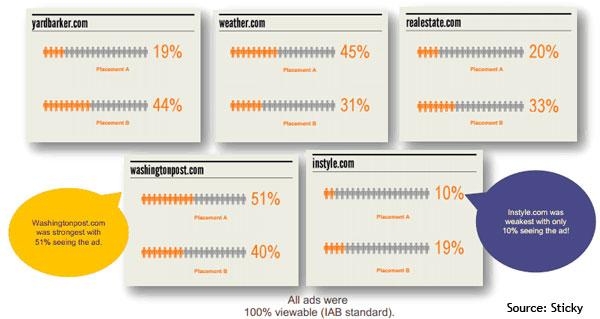

Sticky looked at the performance of ten different brands across five different sites, with two ad placements per site. The sites used were yardbarker.com, weather.com, realestate.com, washingtonpost.com and instyle.com. The study dealt only with impressions that were already viewable.

Washingtonpost.com had the strongest performance, with 51% of the ads in "Placement A" being seen. 40% of the ads in washingtonpost.com's "Placement B" were seen.

Instyle.com had the worst performance. Just 10% of its Placement A and 19% of its Placement B ads were seen.

Weather.com (45% for A, 31% for B) and realestate.com (20% for A, 33% for B) had noticeable, but not drastic, changes between the performances of Placement A and Placement B. Yardbarker.com, however, had the biggest difference between the two placements. Its A ads were seen just 19% of the time, but its B ads were seen 44% of the time.

Jeff Bander, Sticky's president and chief change agent, hopes Sticky's technology will help boost the number of ads that are actually seen. As publishers get more of this type of data and start to take advantage of it, Bander hopes it will bring more brand dollars online.

"About 94% of branding dollars are offline," he said. "Habit is a lot of that, but studies say CMOs are asking what it would take to move more dollars online." He added, "Brand lift and purchase intent [numbers] are always higher when more people see the ad and look at it longer. Those two measurements, of percent seen and time, have a direct correlation to brand lift, which is the main reason people are advertising brands online."

Right now, the data collection takes about 48 hours, but it will "eventually be real-time," Bander told RTM Daily. He hopes to have the data collection entirely automated by the end of 2013.