Faced with mounting legal pressure over a tumultuous history regarding teen safety on its family of apps, Meta is continuing to introduce safety features intended to protect younger users and their families.

The most recent feature focuses on identifying and preventing sextortion scams.

Meta has launched a new education campaign to tackle sextortion scams and to instruct parents and teens to “take back control” if they are targeted.

The campaign enlists creators who the company says are popular with teens to promote personalized messages about how to seek help and find resources.

The company is also partnering with a group of parent creators to help older users understand what sextortion is, how to recognize the signs of a scam, and what steps to take if their teen has been targeted.

In terms of new safety features, Meta has added a safeguard for Teen Accounts -- which already have a number of built-in protections for teen users -- that uses account blocking or spam labeling to make it harder for accounts showing signals of “potentially scammy behavior” to request to follow teens.

The company says it will also alert a teen user when they are chatting with someone who may be in a different country, as sextortion scammers often provide false information about where they live to mislead teens.

In July, Meta said it removed more than 60,000 accounts linked to sextortion scams on Instagram, all of which were based in Nigeria. However, the company added that the perpetrators “targeted primarily adult men in the US.”

To help cut scammers off from targeting potential victims, Meta is also removing “scammy” accounts' ability to see lists of accounts that have “liked” someone’s post, photos they have been tagged in, or other accounts that have been tagged in their photos.

And soon, Meta says it will no longer allow users to directly screenshot or screen record ephemeral images or videos sent in private messages, and will remove the “view once” or “allow replay” options for images or videos on the app's web version.

The tech giant launched its first major expansion of sextortion safety features last spring -- two months after CEO Mark Zuckerberg was forced by U.S. lawmakers to apologize to parents who had lost children to sexploitation on Meta's apps. Some child safety advocates criticized the company, highlighting its failures to protect children from predators and sexual exploitation on its apps.

However, despite the introduction of more tools to help protect younger users from falling victim to various forms of intimate image abuse and predatory behavior, teens are still receiving recommendations for sexually explicit content.

A recent report by The Wall Street Journal found that Instagram was recommending sexual content in the form of Reels to 13-year-old users who appeared interested in “racy content.”

The company says it is now rolling out tools that it first announced last spring, which may prove to have greater impact in the coming months.

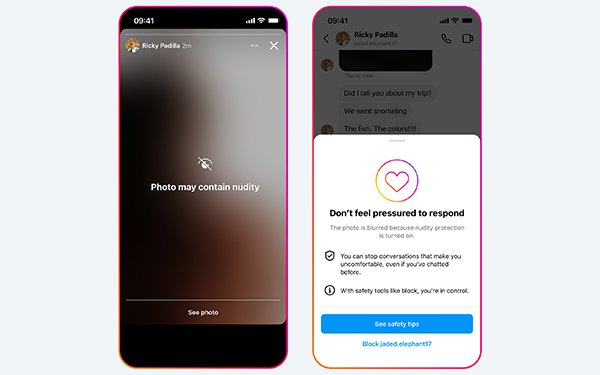

This includes Meta's nudity protection feature in Instagram DMs, which is designed to automatically blur images that contain nudity for users under 18.